Microsoft has staked a claim in the growing field of “media literacy” and “digital literacy,” which aims to instruct members of the public – especially schoolchildren – in what types of digital media they ought to trust and distrust. As FFO has previously reported, media and digital literacy is the latest in a long string of pretexts by the ideologically biased censorship industry to prevent the public from accessing disfavored information sources.

Tag: deepfakes

Minnesota Legislator Files Suit to Dismiss Law That Criminalizes Sharing AI-Generated Election Memes

State Rep. Mary Franson, R-Alexandria, has filed a federal lawsuit seeking to strike down a Minnesota law that can criminalize the sharing of AI-generated election memes.

In 2023, Democrats and Republicans in the Minnesota Legislature passed HF 1370, a bill regulating AI-generated content in the state.

Commentary: Deepfakes, Disinformation, Social Engineering, and Artificial Intelligence in the 2024 Election

Artificial intelligence (AI) and its integration within various sectors is moving at a speed that couldn’t have been imagined just a few years ago. As a result, the United States now stands on the brink of a new era of cybersecurity challenges. With AI technologies becoming increasingly sophisticated, the potential for their exploitation by malicious actors grows exponentially.

Because of this evolving threat, government agencies like the Department of Homeland Security (DHS) and the Cybersecurity and Infrastructure Security Agency (CISA), alongside private sector entities, must urgently work to harden America’s defenses and close any soft spots that may be exploited. Failure to do so could have dire consequences on many levels, especially as we approach the upcoming U.S. presidential election, which is likely to be the first to contend with the profound implications of AI-driven cyber warfare.

The Senate’s ‘No Section 230 Immunity for AI Act’ Would Exclude Artificial Intelligence Developers’ Liability Under Section 230

The Senate could soon take up a bipartisan bill defining the liability protections that apply to artificial intelligence-generated content, a measure that could have considerable impacts on online speech and the development of AI technology.

Republican Missouri Sen. Josh Hawley and Democratic Connecticut Sen. Richard Blumenthal in June introduced the No Section 230 Immunity for AI Act, which would clarify that liability protections under Section 230 of the Communications Decency Act do not apply to text and visual content created by artificial intelligence. Hawley may attempt to hold a vote on the bill in the coming weeks, his office told the Daily Caller News Foundation.

Commentary: AI Is Coming for Art’s Soul

While AI-based technology has recently been used to summon deepfakes and create a disturbing outline for running a death camp, the ever-pervasive digital juggernaut has also been used to write books under the byline of well-known authors.

The Guardian recently reported that five books had appeared for sale on Amazon that were apparently written by author Jane Friedman. Only, they weren’t written by Friedman at all: They were written by AI. When Friedman submitted a claim to Amazon, the company said it would not remove the books because she had not trademarked her name.

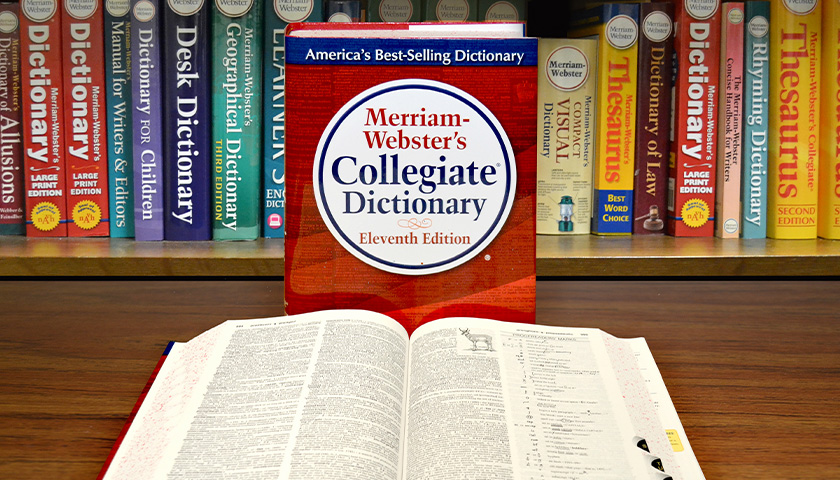

Merriam-Webster’s 2022 Word of the Year: ‘Gaslighting’

Merriam-Webster’s word of the year for 2022 is “gaslighting,” which it defined as “the act or practice of grossly misleading someone especially for one’s own advantage.”

Facebook Will Pay Reuters to Fact-Check the News, ‘Deepfakes’

Social media giant Facebook is partnering with the news agency Reuters to fact-check and verify news headlines, user-generated videos and photos, and other content in English and Spanish on its behalf. The deal adds a high-profile name to Facebook’s worldwide roster of fact-checkers, TechCrunch reports.

Detecting Deepfakes by Looking Closely Reveals a Way to Protect Against Them

by Siwei Lyu

Deepfake videos are hard for untrained eyes to detect because they can be quite realistic. Whether used as personal weapons of revenge, to manipulate financial markets or to destabilize international relations, videos depicting people doing and saying things they never did or said are a fundamental threat…
